AI is all the rage right now, and it is here to stay. Yet almost all the examples you see out there are in Python.

I like Python, but I love Go. Let’s use Go.

How do we do AI?

Running AI on a CPU is painfully slow. Running AI models on a GPU is much faster, although not instantaneous.

There is the hard way. And then there is the easy way.

The hard way is buying multiple powerful GPU machines and shipping them to a data center. Or you can rent those machines from AWS, Google, or Microsoft. Either of these options is expensive.

A better way is to just use an API and not think about servers at all. Maybe OpenAI comes to mind first, but it offers only its own models, and it is not that cheap once your app gets popular and you really start scaling.

Instead, let’s use DeepInfra. They have a lot of models that you can choose from. You can access them from any language, and they are not expensive to run. No servers. Just an API. This is the easy way.

What’s more, with DeepInfra you don’t even need an account initially. You can start for free: you just call their API, subject to a rate limit. Once you need to do something more serious or go into production, you create an account and pay just for what you use.

The App

Today we are gonna build an app using Go with some AI services added to it for good taste.

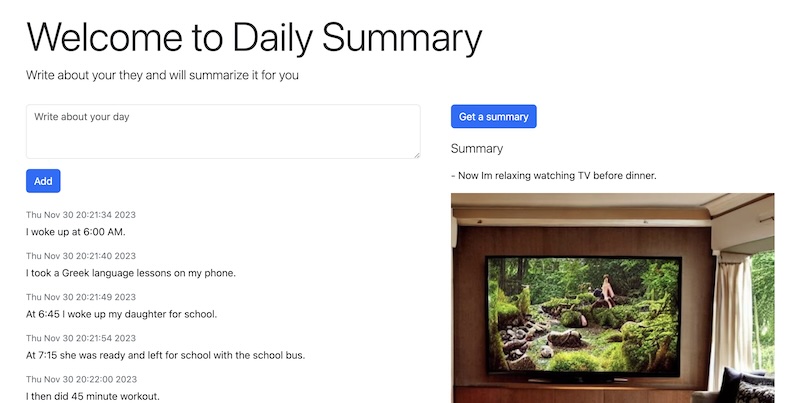

The app will be simple. You will log what happens throughout the day in it. Then with a single click you can get a summary of your day. One more click and you can get a picture based on that summary.

How does that sound to you?

This is how the app will look at the end. I will give you the entire code on GitHub.

The Basics

Let’s begin with a standard production-ready server.

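    func main() {
        log.Println("server starting ...")

        router := NewRouter()
        srv := NewServer(router)

        go func() {
            // ListenAndServe always returns a non-nil error. After Shutdown it
            // is http.ErrServerClosed, which is expected and not a failure.
            if err := srv.ListenAndServe(); err != nil && err != http.ErrServerClosed {
                log.Fatal(err)
            }
        }()

        // Interrupt on SIGINT (Ctrl+C)
        c := make(chan os.Signal, 1)
        signal.Notify(c, os.Interrupt)
        <-c

        // Shutdown gracefully but wait at most 15s
        ctx, cancel := context.WithTimeout(context.Background(), time.Second*15)
        defer cancel()
        if err := srv.Shutdown(ctx); err != nil {
            log.Println("shutdown error:", err)
        }
        log.Println("shutting down")
    }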

    func NewServer(router http.Handler) *http.Server {
        return &http.Server{
            Addr:         ":8000",
            ReadTimeout:  5 * time.Second,
            WriteTimeout: 10 * time.Second,
            IdleTimeout:  120 * time.Second,
            Handler:      router,
            // TLSConfig only takes effect when the server is started with
            // ListenAndServeTLS; main above serves plain HTTP.
            TLSConfig: &tls.Config{
                MinVersion: tls.VersionTLS12,
                // Ignored by current Go releases, which order cipher suites themselves.
                PreferServerCipherSuites: true,
                CurvePreferences: []tls.CurveID{
                    tls.CurveP256,
                    tls.X25519,
                },
                CipherSuites: []uint16{
                    tls.TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,
                    tls.TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,
                    tls.TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,
                    tls.TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,
                    tls.TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,
                    tls.TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,
                },
            },
        }
    }

Nothing unusual here. Next, let’s see what our router looks like:

    func NewRouter() http.Handler {
        r := http.NewServeMux()
        r.HandleFunc("/", HomeHandler)
        r.HandleFunc("/add", AddHandler)
        r.HandleFunc("/remove", RemoveHandler)
        r.HandleFunc("/summary", SummaryHandler)
        r.HandleFunc("/image", ImageHandler)
        return r
    }

    func HomeHandler(w http.ResponseWriter, r *http.Request) {
        if r.Method != http.MethodGet {
            w.WriteHeader(http.StatusMethodNotAllowed)
            return
        }

        // Named data rather than context to avoid shadowing the context package.
        data := struct{ Entries Entries }{AllEntries()}
        tmpl := template.Must(template.ParseFiles("views/index.html"))
        if err := tmpl.Execute(w, data); err != nil {
            log.Println("rendering index:", err)
        }
    }

    ...

Again, rather simple. There are five handlers:

- HomeHandler - serves the main page

- AddHandler - adds a new entry to what you’ve done today

- RemoveHandler - removes such an entry

- SummaryHandler - generates a summary using AI from your entries

- ImageHandler - generates an image out of the summary using AI
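The article only shows HomeHandler, so here is a rough sketch of what a handler like AddHandler could look like. Everything in it is an assumption made for illustration: the in-memory entryStore, the "entry" form field name, and the redirect back to the home page. The actual code on GitHub may well do it differently.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/url"
	"strings"
	"sync"
)

// entryStore is a hypothetical in-memory store guarded by a mutex,
// because handlers run concurrently.
type entryStore struct {
	mu      sync.Mutex
	entries []string
}

// Add appends one entry to the store.
func (s *entryStore) Add(text string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.entries = append(s.entries, text)
}

// All returns a copy of the stored entries.
func (s *entryStore) All() []string {
	s.mu.Lock()
	defer s.mu.Unlock()
	return append([]string(nil), s.entries...)
}

var store = &entryStore{}

// AddHandler accepts a POST with an "entry" form field (an assumed name),
// stores it, and redirects back to the home page.
func AddHandler(w http.ResponseWriter, r *http.Request) {
	if r.Method != http.MethodPost {
		w.WriteHeader(http.StatusMethodNotAllowed)
		return
	}
	text := strings.TrimSpace(r.FormValue("entry"))
	if text == "" {
		http.Error(w, "entry must not be empty", http.StatusBadRequest)
		return
	}
	store.Add(text)
	http.Redirect(w, r, "/", http.StatusSeeOther)
}

// postEntry simulates a form submission against AddHandler and returns
// the HTTP status code of the response.
func postEntry(text string) int {
	form := url.Values{"entry": {text}}
	req := httptest.NewRequest(http.MethodPost, "/add", strings.NewReader(form.Encode()))
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	rec := httptest.NewRecorder()
	AddHandler(rec, req)
	return rec.Code
}

func main() {
	fmt.Println(postEntry("Had coffee with a friend"), store.All())
}
```

An in-memory slice keeps the sketch short; entries disappear when the server restarts, which is fine for a demo but not for anything you want to keep.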

I also used this opportunity to play with Turbo. This JS library comes from the Rails world but can now be used everywhere. It makes adding dynamic and smooth changes to your app very easy, without writing any JavaScript. Your server responds with HTML and the library figures out how to merge the pages or only portions of them.
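To make "the server responds with HTML" a bit more concrete, here is a small sketch of one Turbo mechanism, Turbo Streams, served from Go. The helper names and the "entries" target id are made up for this example, and the app in this article may rely on other Turbo features instead.

```go
package main

import (
	"fmt"
	"html/template"
	"net/http"
	"net/http/httptest"
)

// turboStreamAppend builds a Turbo Stream fragment that appends one list
// item to the element with the given DOM id. Both values are HTML-escaped.
func turboStreamAppend(target, text string) string {
	return fmt.Sprintf(
		`<turbo-stream action="append" target="%s"><template><li>%s</li></template></turbo-stream>`,
		template.HTMLEscapeString(target), template.HTMLEscapeString(text),
	)
}

// writeTurboStream sends the fragment with the MIME type that Turbo
// recognizes as a stream response.
func writeTurboStream(w http.ResponseWriter, fragment string) {
	w.Header().Set("Content-Type", "text/vnd.turbo-stream.html; charset=utf-8")
	fmt.Fprint(w, fragment)
}

func main() {
	rec := httptest.NewRecorder()
	writeTurboStream(rec, turboStreamAppend("entries", "Fixed a <bug>"))
	fmt.Println(rec.Header().Get("Content-Type"))
	fmt.Println(rec.Body.String())
}
```

The browser side needs no custom JavaScript: Turbo reads the action and target attributes and applies the change to the page.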

Preparing to work with an API

Using DeepInfra’s API is just an HTTP request. Let’s create a little function to help us with this common task.

    // Request posts data as JSON to url and returns the raw response body.
    // It exits the process on any failure, which keeps the demo short.
    func Request(url string, data any) []byte {
        dataJSON, err := json.Marshal(data)
        if err != nil {
            log.Fatal(err)
        }

        req, err := http.NewRequest("POST", url, bytes.NewReader(dataJSON))
        if err != nil {
            log.Fatal(err)
        }
        req.Header.Add("Content-Type", "application/json")

        // add authorization header to the req
        // req.Header.Add("Authorization", "Bearer <token>")

        client := &http.Client{}
        resp, err := client.Do(req)
        if err != nil {
            log.Fatal(err)
        }
        defer resp.Body.Close()

        if resp.StatusCode != http.StatusOK {
            log.Fatal("Response status not OK. ", resp.StatusCode)
        }

        body, err := io.ReadAll(resp.Body)
        if err != nil {
            log.Fatal(err)
        }
        return body
    }

The function takes a structure, turns it into JSON, makes a request, and on success returns the whole body as bytes.
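Calling log.Fatal is fine for a demo, but it stops the whole server on the first failed API call. Below is a sketch of a variant that returns an error instead. The name postJSON, the 60 second timeout, and the local test server are my assumptions and not part of the original code.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"time"
)

// postJSON is a hypothetical variant of Request: it posts data as JSON
// and returns the response body, or an error the caller can handle.
func postJSON(url string, data any) ([]byte, error) {
	payload, err := json.Marshal(data)
	if err != nil {
		return nil, fmt.Errorf("encoding request: %w", err)
	}
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(payload))
	if err != nil {
		return nil, fmt.Errorf("building request: %w", err)
	}
	req.Header.Set("Content-Type", "application/json")

	// The timeout is an assumed upper bound; tune it for your models.
	client := &http.Client{Timeout: 60 * time.Second}
	resp, err := client.Do(req)
	if err != nil {
		return nil, fmt.Errorf("sending request: %w", err)
	}
	defer resp.Body.Close()

	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, fmt.Errorf("reading response: %w", err)
	}
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status %d: %s", resp.StatusCode, body)
	}
	return body, nil
}

// demo starts a local test server that echoes the request body with the
// given status code, then calls postJSON against it.
func demo(status int) ([]byte, error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		payload, _ := io.ReadAll(r.Body)
		w.WriteHeader(status)
		w.Write(payload)
	}))
	defer srv.Close()
	return postJSON(srv.URL, map[string]string{"input": "hello"})
}

func main() {
	body, err := demo(http.StatusOK)
	fmt.Println(string(body), err)

	_, err = demo(http.StatusTooManyRequests)
	fmt.Println(err)
}
```

With this shape a handler can answer a failed inference with an error page and keep serving other users.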

Maybe you’ve noticed that I’ve commented out the following lines:

    // add authorization header to the req
    // req.Header.Add("Authorization", "Bearer <token>")

Once you want to go into production and use DeepInfra’s API for more than just experiments (or writing articles), you will get a token in your account, which you have to use here.
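One way to wire the token in without hard-coding it is to read it from the environment. This is only a sketch: the variable name DEEPINFRA_TOKEN and the helper functions are assumptions of mine.

```go
package main

import (
	"fmt"
	"net/http"
	"os"
)

// tokenEnvVar is an assumed environment variable name.
const tokenEnvVar = "DEEPINFRA_TOKEN"

// authorize adds a bearer token to req when one is configured and
// reports whether it did so. Without a token the request stays anonymous.
func authorize(req *http.Request) bool {
	token := os.Getenv(tokenEnvVar)
	if token == "" {
		return false
	}
	req.Header.Set("Authorization", "Bearer "+token)
	return true
}

// authHeaderFor is a demonstration helper: it temporarily sets the token
// and returns the Authorization header that authorize produces.
func authHeaderFor(token string) string {
	os.Setenv(tokenEnvVar, token)
	defer os.Unsetenv(tokenEnvVar)

	req, err := http.NewRequest(http.MethodPost,
		"https://api.deepinfra.com/v1/inference/meta-llama/Llama-2-7b-chat-hf", nil)
	if err != nil {
		return ""
	}
	authorize(req)
	return req.Header.Get("Authorization")
}

func main() {
	fmt.Printf("%q\n", authHeaderFor("abc123"))
	fmt.Printf("%q\n", authHeaderFor(""))
}
```

Keeping the token out of the source also means it never ends up in your Git history.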

Let’s chat

To create our summary I will use a chat model. I’ve decided on the popular Llama 2 7b chat model. You can try another one, just change the link to the model and maybe the response structure.

    func ChatInference(input string) string {
        data := struct {
            Input string `json:"input"`
        }{input}

        body := Request("https://api.deepinfra.com/v1/inference/meta-llama/Llama-2-7b-chat-hf", data)

        var response struct {
            RequestID        string `json:"request_id"`
            TokensCount      int    `json:"num_tokens"`
            InputTokensCount int    `json:"num_input_tokens"`
            Results          []struct {
                Text string `json:"generated_text"`
            } `json:"results"`
            Status struct {
                Status          string  `json:"status"`
                Duration        int64   `json:"runtime_ms"`
                Cost            float64 `json:"cost"`
                TokensGenerated int     `json:"tokens_generated"`
                TokensInput     int     `json:"tokens_input"`
            } `json:"inference_status"`
        }

        err := json.Unmarshal(body, &response)
        if err != nil {
            log.Fatal(err)
        }

        if response.Status.Status != "succeeded" {
            log.Println(response)
            log.Fatal("Inference status not succeeded. ", response.Status.Status)
        } else if len(response.Results) == 0 {
            log.Println(response)
            log.Fatal("No results generated.")
        }

        return response.Results[0].Text
    }

It’s not complicated. The function takes a single text input, the instruction that you give to the model, and returns the first result.

You make a request and you get some JSON in return. You do some basic checks and that’s it.
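To see the decoding and the basic checks in isolation, here is a sketch that runs them against a hand-written response body. The sample JSON is invented for illustration and only mirrors the field names used in the struct above; a real response carries more fields and different values.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// chatResponse keeps only the fields the checks need.
type chatResponse struct {
	Results []struct {
		Text string `json:"generated_text"`
	} `json:"results"`
	Status struct {
		Status string `json:"status"`
	} `json:"inference_status"`
}

// firstResult decodes a response body and returns the first generated
// text, or an error when the inference failed or produced nothing.
func firstResult(body []byte) (string, error) {
	var resp chatResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return "", err
	}
	if resp.Status.Status != "succeeded" {
		return "", fmt.Errorf("inference status %q", resp.Status.Status)
	}
	if len(resp.Results) == 0 {
		return "", errors.New("no results generated")
	}
	return resp.Results[0].Text, nil
}

// sampleBody is an invented example, not a captured API response.
const sampleBody = `{
  "request_id": "example-id",
  "results": [{"generated_text": "You had a productive day."}],
  "inference_status": {"status": "succeeded", "runtime_ms": 1200}
}`

func main() {
	text, err := firstResult([]byte(sampleBody))
	fmt.Println(text, err)
}
```

Fields that the struct does not declare, such as request_id here, are simply ignored by json.Unmarshal.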

But what is our input?

I am glad you asked.

    func Summarize(entries []string) string {
        var input string
        input += "Write a positive summary of my day based only on the following events that happened to me:\n"
        for _, entry := range entries {
            input += entry + "\n"
        }
        return ChatInference(input)
    }

This is the essence, some very basic prompt engineering. We use some predefined instructions and combine them with all the entries of what happened in our day, and then ask the model for a response. This is it.
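If you want to experiment with the wording, it helps to build the prompt separately from the API call so you can print and inspect it. A minimal sketch, where buildPrompt is a name I made up and the instruction text is the one from Summarize:

```go
package main

import (
	"fmt"
	"strings"
)

// instruction is the fixed part of the prompt, taken from Summarize.
const instruction = "Write a positive summary of my day based only on the following events that happened to me:\n"

// buildPrompt joins the instruction with one entry per line.
func buildPrompt(entries []string) string {
	var b strings.Builder
	b.WriteString(instruction)
	for _, entry := range entries {
		b.WriteString(entry)
		b.WriteString("\n")
	}
	return b.String()
}

func main() {
	fmt.Print(buildPrompt([]string{"Went for a run", "Shipped a feature"}))
}
```

Printing the prompt before sending it makes it much easier to see why the model answered the way it did.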

Of course, you can play with the instructions to get different and better results. It can be a lot of fun or frustrating.

In addition, you also have to add some security measures so that the model does only what you intended, creating a summary in this case, but these are outside the scope of this article.
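Just to give a taste of a first step, here is a sketch that bounds what reaches the prompt. The limits and the function name are arbitrary assumptions, and this alone does not stop someone from trying to steer the model with a crafted entry; it only keeps the input small and on one line per entry.

```go
package main

import (
	"fmt"
	"strings"
)

const (
	maxEntries  = 50  // assumed cap on entries per summary
	maxEntryLen = 200 // assumed cap on entry length, in runes
)

// sanitizeEntries collapses all whitespace (including newlines) to single
// spaces, drops empty entries, truncates long ones, and caps the count.
func sanitizeEntries(entries []string) []string {
	clean := make([]string, 0, len(entries))
	for _, e := range entries {
		e = strings.Join(strings.Fields(e), " ")
		if e == "" {
			continue
		}
		if r := []rune(e); len(r) > maxEntryLen {
			e = string(r[:maxEntryLen])
		}
		clean = append(clean, e)
		if len(clean) == maxEntries {
			break
		}
	}
	return clean
}

func main() {
	fmt.Printf("%q\n", sanitizeEntries([]string{"  walked\nthe   dog ", "   "}))
}
```

Truncating by runes rather than bytes avoids cutting a multi-byte character in half.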

Everybody loves a good image

We have the main functionality of the app. The next step is to generate an image out of the summary.

We are going to use the Stable Diffusion v1.5 model:

    func ImageInference(prompt string) string {
        data := struct {
            Prompt string `json:"prompt"`
        }{prompt}

        body := Request("https://api.deepinfra.com/v1/inference/runwayml/stable-diffusion-v1-5", data)

        var response struct {
            RequestID           string   `json:"request_id"`
            Images              []string `json:"images"`
            NSFWContentDetected []bool   `json:"nsfw_content_detected"`
            Seed                int64    `json:"seed"`
            Status              struct {
                Status   string  `json:"status"`
                Duration int64   `json:"runtime_ms"`
                Cost     float64 `json:"cost"`
            } `json:"inference_status"`
        }

        err := json.Unmarshal(body, &response)
        if err != nil {
            log.Fatal(err)
        }

        if response.Status.Status != "succeeded" {
            log.Println(response)
            log.Fatal("Inference status not succeeded. ", response.Status.Status)
        } else if len(response.Images) == 0 {
            log.Println(response)
            log.Fatal("No images generated.")
        }

        return response.Images[0]
    }

This is very similar to what we did above. Again, you can try different models and see what happens. We make a JSON request and get back an image encoded as base64.

Now let’s have a look at what we are actually going to ask the image generation model.
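If you want the raw image bytes, for example to save a file, the string has to be decoded first. The sketch below assumes the value is either plain base64 or a data URI with a base64 payload; I have not verified which form the API returns, so it handles both. The name decodeImage is hypothetical.

```go
package main

import (
	"encoding/base64"
	"errors"
	"fmt"
	"strings"
)

// decodeImage accepts plain base64 or a data URI such as
// "data:image/png;base64,...." and returns the decoded bytes.
func decodeImage(s string) ([]byte, error) {
	if strings.HasPrefix(s, "data:") {
		i := strings.Index(s, ",")
		if i < 0 {
			return nil, errors.New("malformed data URI")
		}
		s = s[i+1:]
	}
	return base64.StdEncoding.DecodeString(s)
}

func main() {
	// "aGVsbG8=" is the base64 encoding of "hello", standing in for image data.
	raw, err := decodeImage("data:image/png;base64,aGVsbG8=")
	fmt.Println(string(raw), err)
}
```

From here os.WriteFile can store the bytes on disk. If the value already is a data URI, you can also place it directly in the src attribute of an img tag without decoding it at all.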

    func SummaryImage(summary string) string {
        return ImageInference(summary + ", photorealistic, fantasy")
    }

To the summary I added only two more details to get the image, but you can get very creative when writing the prompts.

And this is it. You can check the whole code on GitHub. Then just run the command go run . and you can play with it.

As you can see, it is very simple, and you also have endless opportunities to make it better.

Next

I just showed you how to quickly add AI to your app. Now you can experiment.

You can start by altering the prompts to get the results that you want. What’s more, every model accepts not only a prompt but many more parameters that you can play with to improve your results even further. If this is not enough for you, you can try another model. DeepInfra hosts a lot of them to choose from.

Once you are happy with the results, you have to add some security measures. You don’t want the AI to be overwhelmed or used for cases you didn’t intend.

Finally, register on DeepInfra, get your production key, and deploy your app to the millions of users eagerly awaiting it.